A Guide to Structured Generation Using Constrained Decoding

Introduction

We often want specific outputs when interacting with generative language models. This is especially true in programming domains, where a generated output may become a direct input to a function. But sometimes, no matter how explicit you are with your instructions, generative language models will get too creative, deviate off task, or simply succumb to their urge to yap.

Fortunately, there are techniques that ensure language models only return outputs that conform to your requirements. This article serves as a practitioner's guide for perhaps the most powerful of these techniques: constrained decoding. We'll cover what structured generation and constrained decoding are, how they work, best practices, useful patterns, and pitfalls to avoid.

Note to reader

This article is a living document being continuously updated as the field evolves. Terminology such as "structured generation" and "constrained decoding" do not have consensus definitions, and their scopes are evolving over time — the discussion here reflects the author's perspective and may differ from other texts.

Motivating Example

To illustrate constrained decoding, we'll explore an example use case (inspired by SGLang's docs). We want to generate JSON outputs that represent Harry Potter characters, according to the following schema:

{

"name": "Harry Potter",

"house": "Gryffindor",

"blood status": "Half-blood",

"wand": {

"wood": "Holly wood",

"core": "Phoenix feather",

"length": 11.0

}

}

More context on this will follow, but for now, here's what this might look like without constrained decoding and other structured generation techniques using the SGLang framework:

import sglang as sgl

@sgl.function

def harry_potter_gen(s, name):

s += sgl.user(f"Using JSON, describe the character {name} from Harry Potter.")

s += sgl.assistant(sgl.gen("json", max_tokens=256))

sgl.set_default_backend(sgl.RuntimeEndpoint("http://localhost:30000"))

state = harry_potter_gen.run("Hermione Granger")

print(state["json"])

# >>> Sure. Here's the character description based on JSON:

# >>>

# >>> ```json

# >>> {

# >>> "name": "Hermione Granger",

# >>> "age": 15,

# >>> "species": "Human",

# >>> "nationality": "British",

# >>> "gender": "Female",

# >>> "house": "Hufflepuff",

# >>> "personality": "Intelligent, bookish, and compassionate",

# >>> "status": "Alive",

# >>> "birth_date": "1991-01-03",

# >>> "death_date": null

# >>> }

# >>> ```

We can see that the generative language model does a fine job of describing Hermione in JSON format. However, the JSON does not conform to our desired schema. It is also accompanied by unwanted commentary that makes this output inconvenient to parse for further processing.

This article will demonstrate how constrained decoding avoids these issues.

What Is Structured Generation?

In the context of generative language models, structured generation encompasses a range of techniques that aim to generate outputs with a desired structure.

Structured output could take any form depending on the user's requirements, but the archetypal example would be a JSON object with a specific schema. Another example of structured output could be strings that match a regex pattern, such as an email address, a telephone number, or even just a simple "Y" or "N". Combining these ideas, a common structured generation goal could be to output a JSON object whose keys conform to a desired schema and whose values are consistent with expected data types, enumerations, and regex patterns.

Another variation on structured generation are context-free grammars, which are used to generate outputs that follow sets of rules that ensure validity (e.g., to form a working SQL query).

Structured generation can be achieved in various ways, including:

- Prompt design: the simplest approach is to include a description of the desired output structure inside the prompt. This could also involve few-shot prompting, whereby examples of inputs and outputs are included in the prompt.

- Fine-tuning: a model can be subjected to further training for a specialised task using input-output pairs that demonstrate the desired output structure. This will incline the model to generate similarly-shaped responses during inference.

- Multi-stage prompting: rather than have the model directly generate structured output, you can have the model respond to a series of prompts, and then assemble the structured output yourself outside of the generative process.

- Specialised services: OpenAI offers an optional JSON mode that ensures API responses are returned as valid JSON, although it doesn't provide strong guarantees about the schema and contents of the JSON.

These techniques work with varying degrees of success, depending on the difficulty of the task and the capability of the model. However, there's a more forceful method that can guarantee precise outputs, even when working with relatively weak models applied to complex tasks: constrained decoding.

What Is Constrained Decoding and How Does It Work?

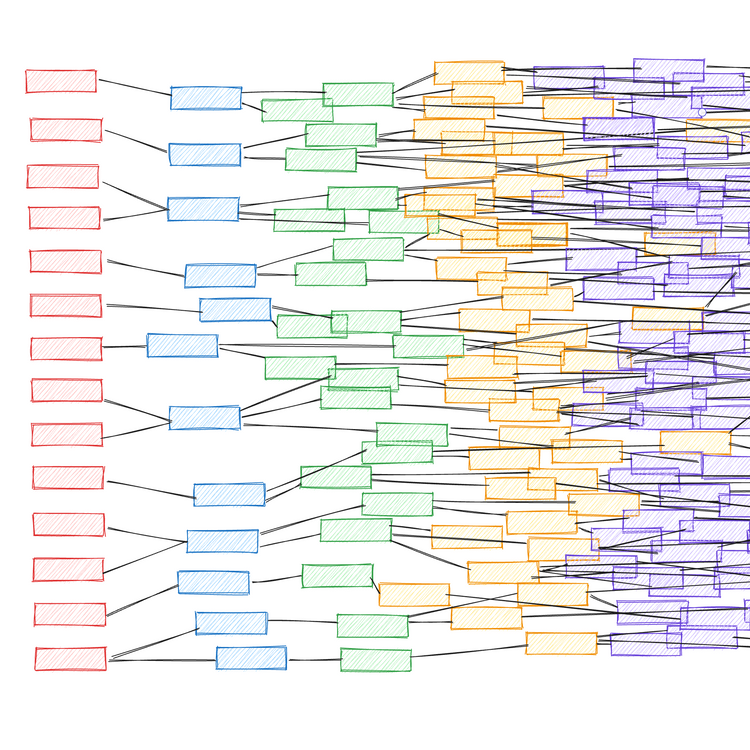

In the context of structured generation, constrained decoding is a technique that manipulates a generative model's token generation process to constrain its next-token predictions to only tokens that do not violate the required output structure.

State of the art constrained decoding skips the parts of the structured output that are boilerplate scaffolding or tokens that can be uniquely determined based on preceding tokens and the constraints of the desired output. Only the parts of the output that strictly require generation are sampled from a restricted set of compatible tokens in the model's next-token probability distribution.

For a full exploration of the mechanics behind constrained decoding, I refer the reader to excellent articles and papers from the teams behind Outlines [1] [2] and SGLang [3]. Constrained decoding is an area of active innovation that continues to benefit from increasingly effective optimisations.

Additional benefits of constrained decoding

As well as guaranteeing compliant outputs, the mechanics of constrained decoding outlined above can also reduce inference costs and improve throughput by:

- Increasing token-generation speed. Constrained decoding simplifies the next-token prediction space, accelerating generation — especially when implemented with clever optimisations that allow some token generation steps to be outright skipped.

- Reducing the number of generated tokens. The throughput of text generation systems is almost always bottlenecked by the speed of token generation, and for many structured generation tasks, much of the output is scaffolding that can bypass generation. For instance, for a rigid JSON schema with fixed field definitions, we can save a lot of time by only generating the values and not the surrounding boilerplate.

There's even precedent suggesting that constrained decoding can improve task performance.

How to Use Constrained Decoding

External APIs may offer some structured generation functionality, such as Open AI's JSON mode, but at the time of writing, I'm not aware of any that support full-fledged constrained decoding.

There are various frameworks that enable constrained decoding to be leveraged with local models, including: SGLang, Outlines, guidance, and DSPy Assertions. In this article, I elect to use SGLang (which builds on Outlines under the hood) to illustrate examples, although the same concepts apply across all frameworks.

These frameworks are generally pretty agnostic towards the local model used, and will usually be compatible with most popular models. In this article, any outputs that accompany the examples have been generated using google/gemma-2b-it.

There are three main ways that a structured output can be defined for constrained decoding: regex, code, and generative constructs.

Structured output using regex

Regular expressions (regex) are a powerful way to define structured output, as they offer maximum specificity over the format and contents. Here's how regex can be used in SGLang for constrained decoding:

import sglang as sgl

character_regex = (

r"""\{

"name": "[\w\d\s]{1,16}",

"house": "(Gryffindor|Slytherin|Ravenclaw|Hufflepuff)",

"blood status": "(Pure-blood|Half-blood|Muggle-born)",

"wand": \{

"wood": "[\w\d\s]{1,16}",

"core": "[\w\d\s]{1,16}",

"length": [0-9]{1,2}\.[0-9]{0,2}

\}

\}"""

)

@sgl.function

def harry_potter_gen(s, name):

s += sgl.user(f"Please describe the character {name} from Harry Potter.")

s += sgl.assistant(sgl.gen("json", max_tokens=256, regex=character_regex))

sgl.set_default_backend(sgl.RuntimeEndpoint("http://localhost:30000"))

state = harry_potter_gen.run("Hermione Granger")

character_json = state["json"]

The main downside of regex is that it's tedious to write and maintain — especially in the context of an active codebase with an evolving data model. Another downside to regex in SGLang is that it introduces a considerable compilation time when initialising run that is not encountered when using the specialised generative constructs.

Depending on the framework, regex may produce different outputs to other generative methods due to algorithmic differences for token selection (more on this in the pitfalls section).

Structured output as code

Structured output can also be defined as code using Pydantic models. These can then be dynamically converted into regex for constrained decoding. Using Pydantic models like this is convenient, as it ensures alignment between your application code and the constrained decoding process. To fully streamline your application, you can also use string interpolation to describe the desired output structure in your instruction prompt. This is a major benefit over alternative approaches, where you may need to reimplement your data model in multiple places in multiple ways, risking code drift. Pydantic models can be used within SGLang like so:

import sglang as sgl

from sglang.srt.constrained import build_regex_from_object

from pydantic import BaseModel

class Wand(BaseModel):

wood: str

core: str

length: float

class Character(BaseModel):

name: str

house: str

blood_status: str

wand: Wand

@sgl.function

def harry_potter_gen(s, name):

s += sgl.user(f"Please describe the character {name} from Harry Potter.")

s += sgl.assistant(

sgl.gen("json", max_tokens=256, regex=build_regex_from_object(Character))

)

sgl.set_default_backend(sgl.RuntimeEndpoint("http://localhost:30000"))

state = harry_potter_gen.run("Hermione Granger")

character_json = state["json"]

Defining structured output using Pydantic models suffers from the same downsides as regex, as this is what they get converted into under the hood.

Whatsmore, the conversion process for complex Pydantic models involving multiple levels of models can be unreliable or yield poorly constructed regex, often rendering this approach unviable in practice. Case in point: the above example does not actually work, as the conversion is invalid, resulting in malformed outputs. This is a bug in the underlying Outlines package which will likely get resolved in SGLang soon.

Structured output using interleaved generative constructs

The third option is to define your structured output as alternating static strings and generative constructs. This has the benefit of splitting out your data model into more manageable components where the generative parts of the task can be defined individually. In SGLang, the task performance of the gen constructs using functionality such as choices can be superior to their regex equivalents due to algorithmic differences in their implementations (more on this in the pitfalls section).

The guidance package provides an illustrative example of this pattern. For our Harry Potter task, free text values for fields such as "name" can either be constrained by gen using regex or by using the stop='"' mechanic to escape the token generation process (without stop='"', the model would continue to predict the rest of the JSON without constraints). Here's how generative constructs can be used in SGLang:

import sglang as sgl

@sgl.function

def harry_potter_gen(s, name):

s += sgl.user(f"Please describe the character {name} from Harry Potter.")

s += sgl.assistant('''{

"name": "''' + sgl.gen("name", max_tokens=32, stop='"') + '''",

"house": "''' + sgl.gen(

"house", choices=["Gryffindor", "Slytherin", "Ravenclaw", "Hufflepuff"]

) + '''",

"blood status": "''' + sgl.gen(

"blood status", choices=["Pure-blood", "Half-blood", "Muggle-born"]

) + '''",

"wand": {

"wood": "''' + sgl.gen("wood", regex=r"[\w\d\s]{1,16}") + '''",

"core": "''' + sgl.gen("core", regex=r"[\w\d\s]{1,16}") + '''",

"length": ''' + sgl.gen("length", regex=r"[0-9]{1,2}\.[0-9]{0,2}") + '''

}

}''')

sgl.set_default_backend(sgl.RuntimeEndpoint("http://localhost:30000"))

state = harry_potter_gen.run("Hermione Granger")

character_json = state.messages()[1]["content"]

Depending on the framework and implementation, managing the scaffolding around the generative constructs can be syntactically awkward. In this SGLang example, we rely on a finickety mix of single and triple quotes. We also have to access the final JSON output in a more opaque way via state.messages()[1]["content"] rather than state["json"] as per the previous approaches, which is fragile when actively experimenting with the prompting strategy.

What Are the Pitfalls?

Remember: the model does not know the constraints in advance

Most constrained decoding pitfalls are due to misalignment between what the generative model wants to output and what the model is forced to output. The mechanics of next-token prediction and constrained decoding are such that the model does not know what is coming in advance: it generates predictions only based on the tokens that precede it.

This can result in poor performance if the model is forced to output something unnatural. For instance, in this example, we observe unexpected behaviour if the model is constrained to output country options that start with a lowercase letter:

import sglang as sgl

@sgl.function

def which_country_upper(s, city):

s += sgl.user(f"In which country is {city} located?")

s += sgl.assistant(sgl.gen("country", choices=["France", "Spain", "Italy"]))

@sgl.function

def which_country_lower(s, city):

s += sgl.user(f"In which country is {city} located?")

s += sgl.assistant(sgl.gen("country", choices=["france", "spain", "italy"]))

sgl.set_default_backend(sgl.RuntimeEndpoint("http://localhost:30000"))

state_upper = which_country_upper.run("Paris")

state_lower = which_country_lower.run("Paris")

print(state_upper["country"]) # >>> France

print(state_lower["country"]) # >>> spain

The best way to mitigate this is to ensure the model understands the task and expectations by providing sufficient information in the prompt. If working with JSON, it's also worth considering the ordering of your schema fields, and structuring them logically such that they're amenable to next-token prediction. This may also include using descriptive field names, and not constraining the model to esoteric field values.

Inspecting the token probabilities is a useful debugging technique to identify cases where the model has been forced to output something with low confidence. With SGLang, you can retrieve the logprobs for each choice option like this: state.get_meta_info("house")["normalized_prompt_logprobs"].

Beware greedy token selection

Another pitfall to be mindful of is how your constrained decoding is being implemented algorithmically. This will vary depending on the framework and constructs you're using. For instance, in SGLang, constrained decoding for a multiple-choice string is different when configured as regex versus gen's choices argument. The former uses a greedy next-token prediction, whereas the latter evaluates all options completely. Other libraries may use variations on beam search. We can see how this can go wrong in the following example:

import sglang as sgl

@sgl.function

def us_president_choices(s):

s += sgl.user("Name a US president.")

s += sgl.assistant(

"An example of a US president is " +

sgl.gen("president", choices=["Donald Duck", "Millard Fillmore"])

)

@sgl.function

def us_president_regex(s):

s += sgl.user("Name a US president.")

s += sgl.assistant(

"An example of a US president is " +

sgl.gen("president", regex=r"(Donald Duck|Millard Fillmore)")

)

sgl.set_default_backend(sgl.RuntimeEndpoint("http://localhost:30000"))

state_choices = us_president_choices.run()

state_regex = us_president_regex.run()

print(state_choices["president"]) # >>> Millard Fillmore

print(state_regex["president"]) # >>> Donald Duck

The greedy algorithm used by the regex implementation is short-sighted, and cannot resist choosing the "Donald" option, despite ultimately landing on an incorrect answer. The choices approach avoids this by evaluating the quality of all options in their entirety, and not just based on the most attractive initial token.

What Are Some Useful Tips and Patterns?

How to generate a varying number of structured outputs

A tricky but not uncommon structured generation requirement is to generate zero, one, or many outputs that adhere to constraints, from a single input. Specifying such constraints in regex is sometimes theoretically possible, but usually ill-advised in practice for all but the most simple string patterns. A better approach is to use control flow to enable the model to generate multiple structured outputs in a series of responses. Here's a pattern for this in SGLang:

import sglang as sgl

@sgl.function

def harry_potter_gen(s, names, max_characters=5):

s += sgl.user(f"Describe these Harry Potter characters: {', '.join(names)}")

n = 1

while n <= max_characters:

s += sgl.assistant('''{

"name": "''' + sgl.gen("name", max_tokens=32, stop='"') + '''",

"house": "''' + sgl.gen(

"house", choices=["Gryffindor", "Slytherin", "Ravenclaw", "Hufflepuff"]

) + '''",

"blood status": "''' + sgl.gen(

"blood status", choices=["Pure-blood", "Half-blood", "Muggle-born"]

) + '''",

"wand": {

"wood": "''' + sgl.gen("wood", max_tokens=32, stop='"') + '''",

"core": "''' + sgl.gen("core", max_tokens=32, stop='"') + '''",

"length": ''' + sgl.gen("length", regex=r"[0-9]{1,2}\.[0-9]{0,2}") + '''

}

}''')

s += sgl.user("Are there any more characters to describe? (Y/N)")

s += sgl.assistant(sgl.gen(f"continue_{n}", choices=["Y", "N"]))

if s[f"continue_{n}"] == "N":

break

n += 1

s += sgl.user("OK, describe the next character.")

s["n"] = min(n, max_characters)

sgl.set_default_backend(sgl.RuntimeEndpoint("http://localhost:30000"))

state = harry_potter_gen.run(["Ron Weasley", "Snape"])

characters_json = [state.messages()[1+i*4]["content"] for i in range(state["n"])]

Another strategy for generating one-or-many type outputs is to "seed" the first element in an array with constrained decoding, and then have an unconstrained generative function for subsequent elements (with a suitable stop criterion). The assumption is that the model will be smart enough to continue the pattern established by the first element. This can work reliably for straightforward substructures, such as simple array fields within a larger JSON object.

Post-processing of structured outputs

There are scenarios where you can achieve better task performance by defining intermediary structured outputs for constrained decoding, and then converting this to your final structured output during post-processing.

This can be the case when using enumerations that the model handles poorly. This can be mitigated by temporarily adding more intuitive aliases. Here's a contrived example (that would be better avoided using gen's choices instead of regex):

import sglang as sgl

@sgl.function

def grass(s):

s += sgl.user(f"What colour is grass?")

s += sgl.assistant(sgl.gen("colour", regex=f"(Grey|Forest green)"))

colours = {"Grey": "Grey", "Forest green": "Forest green", "Green": "Forest green"}

@sgl.function

def grass_aliases(s):

s += sgl.user(f"What colour is grass?")

s += sgl.assistant(sgl.gen("colour", regex=f"(Grey|Forest green|Green)"))

sgl.set_default_backend(sgl.RuntimeEndpoint("http://localhost:30000"))

state = grass.run()

state_aliases = grass_aliases.run()

print(state["colour"]) # >>> Grey

print(colours[state_aliases["colour"]]) # >>> Forest green

Another case where post-processing can be useful is for JSON schemas that contain optional fields. Rather than use complex regex to specify the optional fields, it's usually easiest to include all fields in the structured output and have the generative model populate unneeded field values with placeholders. These fields can then be deleted during post-processing. Even for expansive JSON schemas with many optional fields, this is inexpensive with constrained decoding as this JSON scaffolding does not actually get generated.

Advanced Constrained Decoding: Context-Free Grammars

Context-free grammars (CFG) are a tool in computational linguistics for describing the syntax of languages. Put simply, CFGs define rule sets that specify how strings can be constructed. CFGs can be thought of as a more powerful and expressive form of regex, that can handle complications like nested and recursive structures, and tasks like balancing parentheses. This makes CFGs a useful tool for complex structured generation tasks such as elaborate JSON objects, that can bypass some of the hackery shown previously when working with simple generative constructs.

SGLang does not support context-free grammars, but llama.cpp does. The code snippet below shows how an array of harry potter character JSON can be defined in llama.cpp's required format, GBNF (a variant of Backus-Naur form).

from llama_cpp.llama import LlamaGrammar

HARRY_POTTER_GBNF = r"""

ws ::= ([ \t\n] ws)?

string ::=

"\"" (

[^"\\\x7F\x00-\x1F] |

"\\" (["\\/bfnrt] | "u" [0-9a-fA-F] [0-9a-fA-F] [0-9a-fA-F] [0-9a-fA-F])

)* "\""

digit ::= "0" | "1" | "2" | "3" | "4" | "5" | "6" | "7" | "8" | "9"

one-or-two-digits ::= digit | digit digit

zero-to-two-digits ::= "" | digit | digit digit

house ::= "\"Gryffindor\"" | "\"Hufflepuff\"" | "\"Ravenclaw\"" | "\"Slytherin\""

blood ::= "\"Muggle-born\"" | "\"Half-blood\"" | "\"Pure-blood\""

wand ::= (

"{\n" ws

"\"wood\": " string "," ws

"\"core\": " string "," ws

"\"length\": " one-or-two-digits "." zero-to-two-digits ws

"}"

)

character ::= (

"{\n" ws

"\"name\": " string "," ws

"\"house\": " house "," ws

"\"blood status\": " blood "," ws

"\"wand\": " wand ws

"}"

)

root ::= "[\n " character (",\n" ws character)* ws "]"

"""

grammar = LlamaGrammar.from_string(HARRY_POTTER_GBNF, verbose=False)

When run, we can see that this can successfully handle generating zero-or-one-or-many type outputs in a single conversation turn.

from llama_cpp.llama import Llama

llm = Llama(

model_path="/Users/aidan/models/Meta-Llama-3-8B-Instruct.Q4_0.gguf",

n_gpu_layers=-1,

verbose=False,

)

output = llm.create_chat_completion(

messages=[

{

"role": "user",

"content": "Using JSON, describe these Harry Potter characters: "

+ "Ron Weasley, Snape.",

},

],

grammar=grammar,

)

print(output["choices"][0]["message"]["content"])

# >>> [

# >>> {

# >>> "name": "Ronald Bilius Weasley",

# >>> "house": "Gryffindor",

# >>> "blood status": "Pure-blood",

# >>> "wand": {

# >>> "wood": "Holly",

# >>> "core": "Veela hair",

# >>> "length": 10.5

# >>> }

# >>> },

# >>> {

# >>> "name": "Severus Tobias Snape",

# >>> "house": "Slytherin",

# >>> "blood status": "Half-blood",

# >>> "wand": {

# >>> "wood": "Blackthorn",

# >>> "core": "Unicorn hair",

# >>> "length": 11.75

# >>> }

# >>> }

# >>> ]

If working with GBNF syntax doesn't appeal to you, guidance is another good option that supports context-free grammars via a purely pythonic interface.

Conclusion

Constrained decoding is a powerful but underutilised technique for structured generation. It's a significant advantage that local models have over external APIs, and can enable relatively small and inexpensive models to perform comparably to much larger alternatives.

The throughput and latency improvements that constrained decoding provide can also be extremely compelling. In my experience, SGLang has particularly impressive backend optimisations that increases the speed of JSON generation by an order of magnitude versus unconstrained equivalents (whilst also guaranteeing well-formed outputs...!).

Constrained decoding is a vital part of the structured generation toolkit that I encourage the reader to try for any tasks where e a reliable output structure would be beneficial.

Code for this analysis can be found on GitHub

Member discussion