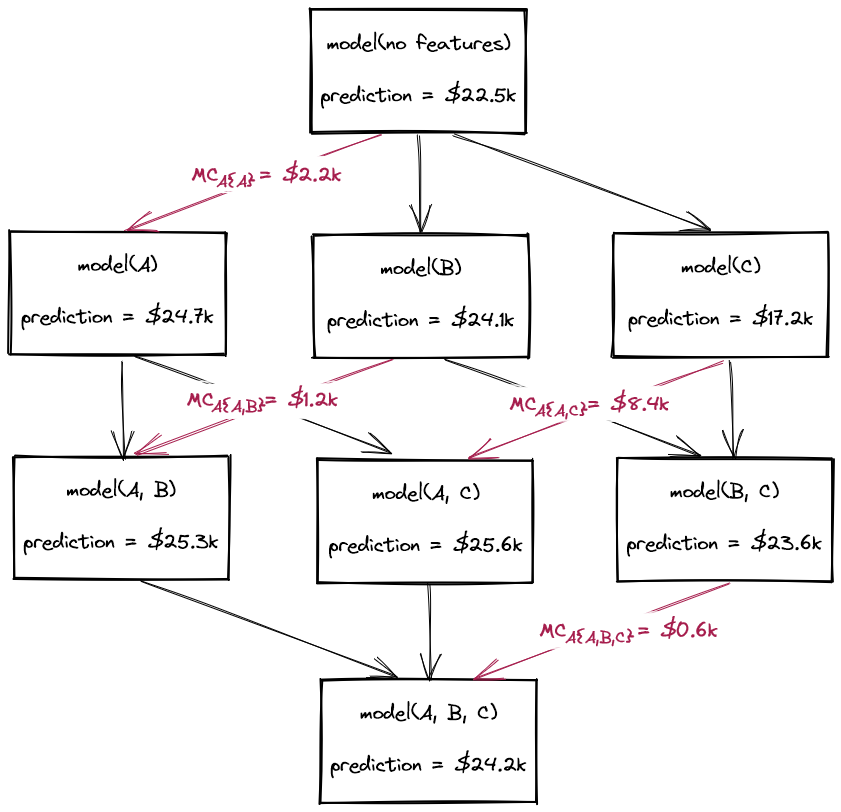

Approximating Shapley Values for Machine Learning

The how and why of Shapley value approximation, explained in code

How Shapley Values Work

In this article, we will explore how Shapley values work, not through cryptic formulae but by way of code and simplified explanations.

Supervised Clustering: How to Use SHAP Values for Better Cluster Analysis

Cluster analysis is a popular method for identifying subgroups within a population, but the results are often challenging to interpret.

Explaining Machine Learning Models: A Non-Technical Guide to Interpreting SHAP Analyses

With interpretability becoming an increasingly important requirement for machine learning projects, there is a growing need to communicate the complex outputs of techniques such as SHAP to non-technical stakeholders.